Abstract

Recent text-to-image (T2I) generation models have demonstrated impressive capabilities in creating images from text descriptions. However, these models often fail to generate images that precisely match the details of the text inputs, producing errors such as incorrect spatial relationships or missing objects. In this paper, we introduce SELMA: Skill-Specific Expert Learning and Merging with Auto-Generated Data, a novel paradigm that improves the faithfulness of T2I models by fine-tuning them on automatically generated, multi-skill image-text datasets with skill-specific expert learning and merging. First, SELMA leverages an LLM's in-context learning capability to generate multiple datasets of text prompts that can teach different skills, and then generates images from those prompts with a T2I model. Next, SELMA adapts the T2I model to the new skills by learning multiple single-skill LoRA (low-rank adaptation) experts and then merging them. Independent expert fine-tuning specializes separate models for different skills, and expert merging builds a joint multi-skill T2I model that can generate faithful images from diverse text prompts while mitigating knowledge conflicts between the different datasets. We empirically demonstrate that SELMA significantly improves the semantic alignment and text faithfulness of state-of-the-art T2I diffusion models on multiple benchmarks (+2.1% on TIFA and +6.9% on DSG), on human preference metrics (PickScore, ImageReward, and HPS), and in human evaluation. Moreover, fine-tuning with image-text pairs auto-collected via SELMA achieves performance comparable to fine-tuning with ground-truth data. Lastly, we show that fine-tuning with images from a weaker T2I model can improve the generation quality of a stronger T2I model, suggesting promising weak-to-strong generalization in T2I models.
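The expert learning and merging step can be illustrated with a small sketch. It assumes that each single-skill expert adds the standard LoRA update delta_W_i = (alpha / r) * B_i @ A_i to a frozen weight W, and that the experts are merged by uniformly averaging those updates. The uniform average and the helper names (`lora_delta`, `merge_experts`) are hypothetical illustrations, not SELMA's released code; plain Python lists keep the example dependency-free.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    # Plain matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(x[i][t] * y[t][j] for t in range(len(y)))
             for j in range(len(y[0]))] for i in range(len(x))]


def lora_delta(b: Matrix, a: Matrix, alpha: float) -> Matrix:
    # Standard LoRA update (alpha / r) * B @ A; rank r = number of rows of A.
    scale = alpha / len(a)
    return [[scale * v for v in row] for row in matmul(b, a)]


def merge_experts(w: Matrix, experts: List[Tuple[Matrix, Matrix]],
                  alpha: float) -> Matrix:
    # Hypothetical merge rule (assumption): W + mean_i(delta_W_i),
    # i.e. a uniform average of every skill expert's low-rank update.
    deltas = [lora_delta(b, a, alpha) for b, a in experts]
    n = len(deltas)
    return [[w[i][j] + sum(d[i][j] for d in deltas) / n
             for j in range(len(w[0]))] for i in range(len(w))]


# Two rank-1 "skill experts" touching different directions of a 2x2 weight.
W = [[1.0, 0.0], [0.0, 1.0]]
experts = [([[1.0], [0.0]], [[1.0, 0.0]]),   # expert for skill 1
           ([[0.0], [1.0]], [[0.0, 1.0]])]   # expert for skill 2
merged = merge_experts(W, experts, alpha=1.0)
```

Here the sketch averages the products B_i @ A_i rather than averaging the A and B factors separately, because the product of averaged factors is generally not the average of the experts' updates and would mix skills in ways no single expert learned.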

Method

Illustration of the four-stage pipeline of SELMA. (a) Prompt Generation: Given a short skill description and a few (i.e., three) seed examples of a specific skill, we use an LLM to generate prompts that teach the skill, while maintaining prompt diversity via text-similarity-based filtering. (b) Image Generation: Given the LLM-generated text prompts, we generate training images with a T2I model. (c) Skill-Specific Expert Learning: We learn skill-specific expert T2I models with LoRA fine-tuning. (d) Merging Expert Models: We obtain a multi-skill T2I model by merging the skill-specific LoRA parameters.
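The diversity filter in stage (a) can be sketched as a greedy pass over the LLM's outputs: a new prompt is kept only if its similarity to every prompt already kept stays below a threshold. This is a minimal sketch that assumes ROUGE-L F1 over lowercased whitespace tokens as the text-similarity measure and a threshold of 0.7; the measure, the threshold, and the function names are assumptions for illustration, not the paper's exact settings.

```python
from typing import List


def lcs_len(a: List[str], b: List[str]) -> int:
    # Longest common subsequence length via a rolling 1-D DP table.
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, 1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l_f1(a: str, b: str) -> float:
    # ROUGE-L F1 = 2 * LCS / (len(a) + len(b)) on lowercased whitespace tokens.
    ta, tb = a.lower().split(), b.lower().split()
    if not ta or not tb:
        return 0.0
    return 2 * lcs_len(ta, tb) / (len(ta) + len(tb))


def filter_prompts(candidates: List[str], threshold: float = 0.7) -> List[str]:
    # Greedy filter (hypothetical threshold): keep a prompt only if it is
    # below the similarity threshold against every previously kept prompt.
    kept: List[str] = []
    for prompt in candidates:
        if all(rouge_l_f1(prompt, q) < threshold for q in kept):
            kept.append(prompt)
    return kept


candidates = [
    "a red cube on a blue sphere",
    "a red cube on top of a blue sphere",       # near-duplicate, F1 = 0.875
    "three cats sitting under a wooden table",
]
kept = filter_prompts(candidates)
```

The greedy order means earlier prompts win ties; shuffling the LLM outputs before filtering would avoid systematically favoring whatever the model generated first.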

Generation Examples

SELMA helps improve SDXL in various skills, including counting, text rendering, spatial relationships, and attribute binding.

Experiments

SELMA vs. Different Alignment Methods for T2I Generation

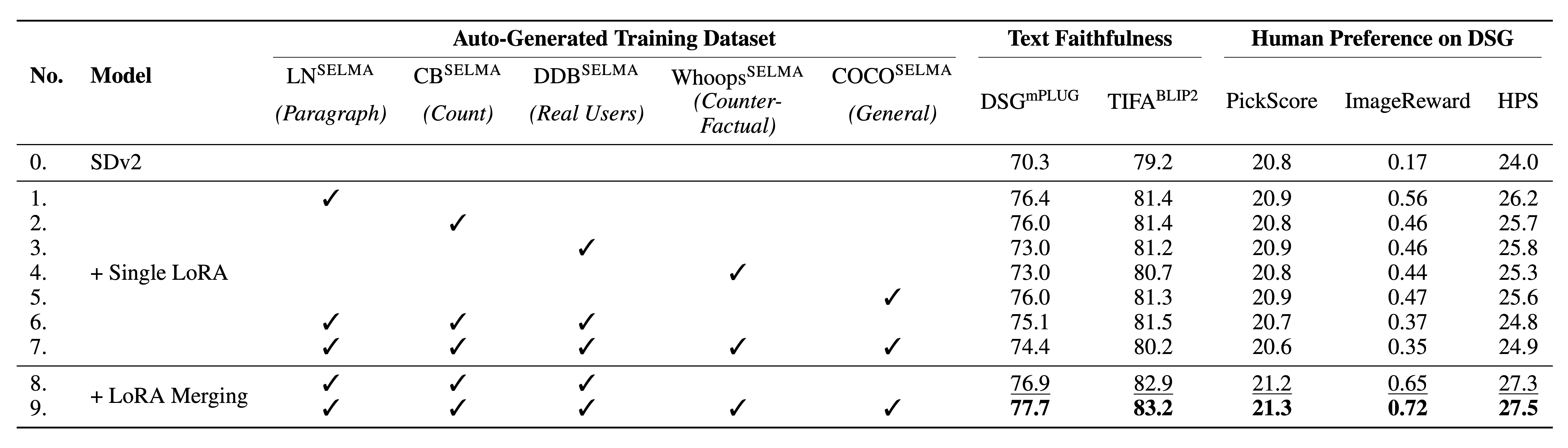

Effectiveness of Learning & Merging Skill-Specific Experts

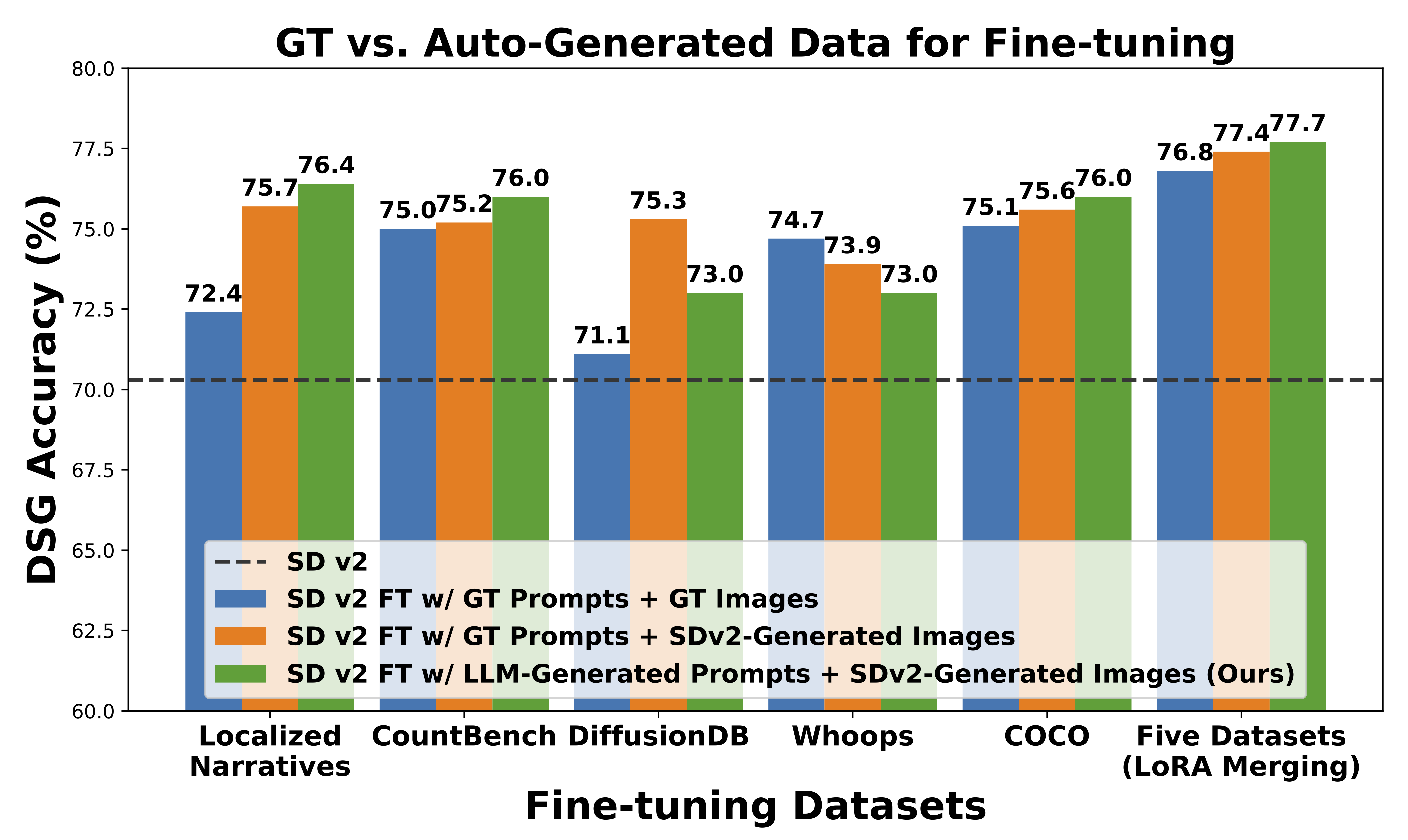

Effectiveness of Auto-Generated Data

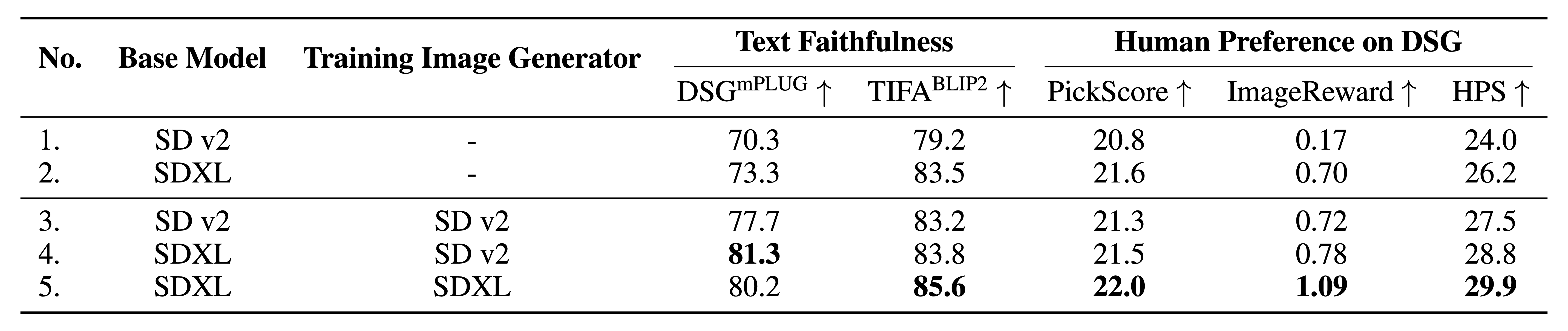

Weak to Strong Generalization

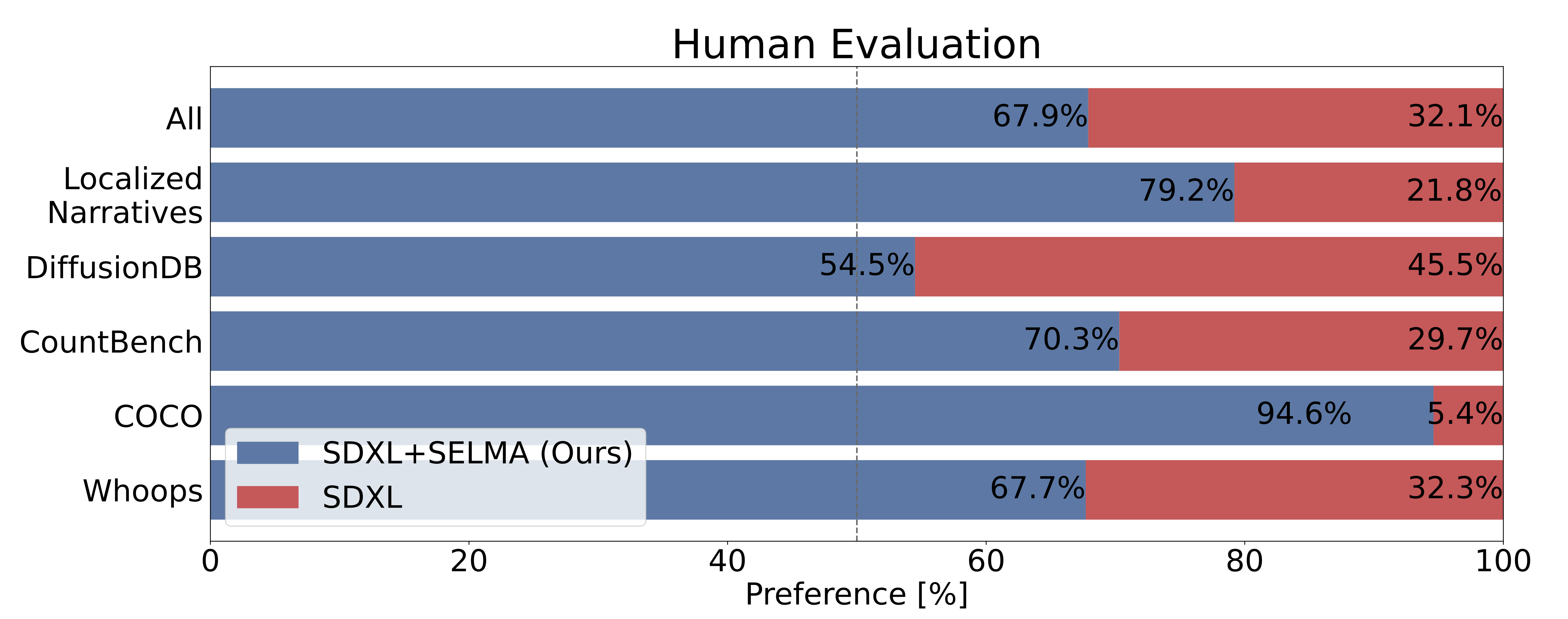

Human Evaluation

BibTeX

@article{li2024selma,
  author = {Jialu Li* and Jaemin Cho* and Yi-Lin Sung and Jaehong Yoon and Mohit Bansal},
  title = {SELMA: Learning and Merging Skill-Specific Text-to-Image Experts with Auto-Generated Data},
  journal = {arXiv},
  year = {2024},
  url = {https://arxiv.org/abs/2403.06952}
}